Blocks in Objective-C are super useful for making your object-oriented code a bit more functional. But as blocks are an extension to the C language, they have to play by the rules of C, so the syntax is a little obscure, and the documentation can be a little hard to find. So here’s a guide on how to declare blocks so you can use them in various scenarios.

Update: It didn’t work out, but all the content from that blog has been merged back here.

Starting over is often a difficult, but necessary, way to revitalize yourself. When I was in middle school, I wrote a column in my school’s student newspaper about video games. I’ve been writing since before I was coding, but lately coding has superseded writing in importance in my life. I haven’t been content with this for a long time, and have been trying a variety of strategies to make myself write more. My personal blog, SteveStreza.com, has acted somewhat as the outlet for this. It has succeeded in getting me to write long-form articles, but it has largely failed at producing shorter and more frequent content. The flip side is a lack of focus, and the burden of producing still more long-form content. I love writing, but this gap in what I have been writing has been bothering me for a while.

So today, I’m beginning a new experiment, Informal Protocol. This new blog is focused on development, design, tech, and culture. The goal is to keep most articles at 5 paragraphs or fewer, and to have at least one new post a day. But as the name implies, this is an informal protocol, and won’t always be followed. Quantity and quality will have peaks and valleys, and the focus may skew one way or another. It’s entirely possible the direction will drift and this becomes something else entirely. But hey, sometimes you just have to give it a shot.

Informal Protocol is an experiment. Like all experiments, it may fail. But sometimes you have to just jump face first into a new adventure and start over. If you would like to join me on this adventure, you can follow new posts at Informal Protocol via RSS, App.net, or Twitter.

One of JavaScript’s greatest strengths is its extremely powerful first-class functions. You can use them to encapsulate portions of code, large or small, and pass them around like any other object. node.js takes this and builds an entire API on top of it. This power is great, but left unchecked, it can lead to convoluted and complex code: the “callback spaghetti” problem. Here’s a sample JavaScript function that chains together some asynchronous APIs.

```javascript
function run() {
  doSomething(function(error, foo) {
    if (error) {
      // handle the error
    } else {
      doAnotherThing(function(error, bar) {
        if (error) {
          // handle the error
        } else {
          doAThirdThing(function(error, baz) {
            if (error) {
              // handle the error
            } else {
              // finish
            }
          });
        }
      });
    }
  });
}
```

async.js tries to fix this by providing APIs that structure these steps more logically while keeping the asynchronous design pattern that works so well. It offers a number of control flow tools for running asynchronous operations serially, in parallel, or as a “waterfall” (where each step passes its results to the next, and an error in any step skips the remaining steps). It also works with arrays: it can iterate over items, run map/reduce/filter operations, and sort arrays. It does all of this asynchronously, and returns the data in a structured manner. Rewritten with async.js, the code above looks like this:

```javascript
function run() {
  async.waterfall(
    [
      function(callback) {
        doSomething(function(error, foo) {
          callback(error, foo);
        });
      },
      function(foo, callback) {
        doAnotherThing(function(error, bar) {
          callback(error, foo, bar);
        });
      },
      function(foo, bar, callback) {
        doAThirdThing(function(error, baz) {
          callback(error, foo, bar, baz);
        });
      }
    ],
    function(error, foo, bar, baz) {
      if (error) {
        // one of the steps had an error and failed early
      } else {
        // finish
      }
    }
  );
}
```

While the resulting code may be a little longer in terms of lines, it has a much more clearly defined structure, and errors are handled in one place, not three. This makes the code much more readable and maintainable. I love this pattern so much that I’ve begun porting it to Objective-C as IIIAsync. If you’re a JavaScript developer and you use functions for their more advanced use cases, you want to use async.js.
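The core idea of a waterfall is small enough to sketch in a few lines. This is not async.js’s actual implementation. The `waterfall` function below is a hypothetical stand-in, and it assumes every step uses Node-style callbacks of the form `callback(error, ...results)`. Each step’s results become the next step’s leading arguments, and the first error jumps straight to the final callback.

```javascript
// Hypothetical minimal waterfall helper (not async.js's source).
// Assumes each task calls its callback as callback(error, ...results).
function waterfall(tasks, done) {
  let index = 0;

  // Advances to the next task, forwarding the previous task's results.
  function next(error, ...results) {
    // Stop on the first error, or finish once every task has run.
    if (error || index === tasks.length) {
      done(error || null, ...results);
      return;
    }
    const task = tasks[index++];
    task(...results, next);
  }

  next(null);
}

// Usage with hypothetical steps; setTimeout stands in for real async I/O.
waterfall(
  [
    (callback) => setTimeout(() => callback(null, "foo"), 10),
    (foo, callback) => setTimeout(() => callback(null, foo, "bar"), 10),
  ],
  (error, foo, bar) => {
    if (error) {
      console.error("a step failed early:", error);
    } else {
      console.log(foo, bar); // prints foo bar
    }
  }
);
```

A single `next` function that advances an index is what flattens the nesting, because no step needs to know what runs after it. A production library would also need to guard against a step calling its callback more than once, which this sketch does not do.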

My Giant Hard Drive: Building a Storage Box with FreeNAS

UPDATE 2/20/2015: This build failed after about 15 months, due to extensive drive failure. By extensive, I mean there were a total of 9 drive replacements before three drives gave out over a single weekend. This correlates closely with data recently published by Backblaze, which suggested 3 TB Seagate drives are exceptionally prone to failure. I’ve replaced these with 6 HGST Deskstar NAS 4TB drives, which were rated highly and are better suited for NAS environments.

For many years, I’ve had a lot of hard drives being used for data storage. Movies, TV shows, music, apps, games, backups, documents, and other data have been moved between hard drives and stored in inconsistent places. This has always been the cheap and easy approach, but it has never been really satisfying. And with little to no redundancy, I’ve suffered a non-trivial amount of data loss as drives die and files get lost. Now, I’m not alone in having this problem, and others have figured out ways of solving it. One of the most interesting is a computer dedicated to one thing: storing data, and lots of it. These computers are called network-attached storage, or NAS, computers. A NAS is a specialized computer that has lots of hard drives, a fast connection to the local network, and…that’s about it. It doesn’t need a high-end graphics card, a 20-inch monitor, or the other things we typically associate with computers. It just sits on the network and quietly serves and stores files. There are off-the-shelf boxes you can buy to do this, such as machines made by Synology or Drobo, or you can assemble one yourself.

I’ve been considering making a NAS for myself for over a year, but kept putting it off due to expense and difficulty. But a short time ago, I finally pulled the trigger on a custom-assembled machine for storing data. Lots of it: almost 11 terabytes of storage, in fact. This machine is made up of 6 hard drives, and can withstand the failure of two of them without losing a single file. If any drives do fail, I can replace them and keep on working. And these 11 terabytes act as one giant hard drive, not as 6 independent ones that have to be organized separately. It’s an investment in my storage needs that should grow as I need it to, and last several years.
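The “almost 11 terabytes” figure can be checked with some quick arithmetic. This sketch makes three assumptions. First, it uses six 3 TB drives, the size named in the update note. Second, it assumes a double-parity layout such as ZFS RAID-Z2; that matches the two-drive fault tolerance described here, but the post does not name the layout. Third, it assumes the reported capacity is in binary units (TiB), as most operating systems display it. The `usableCapacity` helper is hypothetical and ignores filesystem overhead, so real usable space will be somewhat lower.

```javascript
// Rough usable capacity of a double-parity array (e.g. RAID-Z2 or RAID 6).
// Assumption: parity consumes the equivalent of `parityDrives` whole drives;
// filesystem metadata and reserved space are ignored.
function usableCapacity(driveCount, driveSizeTB, parityDrives = 2) {
  if (driveCount <= parityDrives) {
    throw new RangeError("need more drives than parity drives");
  }
  const dataDrives = driveCount - parityDrives;
  const decimalTB = dataDrives * driveSizeTB;     // units drive makers advertise (10^12 bytes)
  const binaryTiB = (decimalTB * 1e12) / 2 ** 40; // units most operating systems report
  return { dataDrives, decimalTB, binaryTiB };
}

const original = usableCapacity(6, 3);
console.log(original.decimalTB, original.binaryTiB.toFixed(2)); // prints 12 10.91
```

Four data drives of 3 TB give 12 TB as sold, which shows up as roughly 10.9 TiB. With the 4 TB replacement drives from the update, the same formula gives 16 TB, or roughly 14.55 TiB.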

Building a NAS took a lot of research, and other people have been equally interested in building their own NAS storage system, so I have condensed what I learned and built into this post. Doing this yourself is not for the faint of heart. It took at least 12 hours of work to assemble and set up the NAS to my needs, and it required knowledge of how UNIX works to make it do what I wanted. This post walks through a lot of that, but still requires skill in system administration (and no, I probably won’t be able to help you figure out why your system is not working). If you’ve never run your own server before, you may find this too overwhelming, and would be better served by an off-the-shelf NAS solution. However, building the machine yourself is far more flexible and powerful, and offers some really useful automation and service-level tools that turn it from a dumb hard drive into an integral part of your data and media workflows.

I grew up knowing how to play music. My parents started me on piano lessons when I was very young, and I played violin in middle and high school. When I was in college, I taught myself how to be a human beatbox. Lately, my tastes in music have turned more towards the electronic spectrum; perhaps a fitting choice, given my background as a software engineer. For years, I’ve owned a copy of Apple’s Logic audio production app (Logic Express 8, recently upgraded to Logic Pro 9). And while I had played with it on and off, I never really finished anything, due both to a perceived lack of skill and a lack of confidence in my ability to actually make something worthwhile.

Fast forward to a time when the Korean pop song Gangnam Style by PSY has taken the Internet by storm, racking up a hundred million views in a few weeks. Lots of people were quick to put out mashups of it. I’ve had a fascination with this song since I first heard it. That everyone else was getting into mashups of a song I was completely hooked on created an itch in my brain to give it a try. At the very least, I could put some ideas in and see what happened; I was safe in my insecurity about my own ability because I never had to let another soul hear whatever came out.

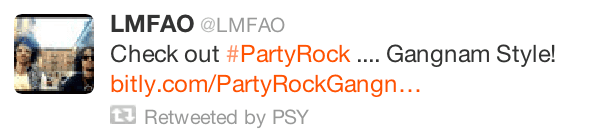

Last weekend was the three-day Labor Day weekend, and I had nothing better to do. I spent Saturday putting the song together, combining the K-pop music track with vocals from LMFAO, Dev, the Offspring, and the Bloodhound Gang (as unlikely a combo as you’d expect to find in a mashup). I posted it to SoundCloud and got a bunch of positive feedback along with some constructive criticism. Over Sunday I tweaked a few things in the song. And on Monday, I decided to try to put a video together. The whole time, my brain was fighting me to stop, to give up, to pretend I’d never tried, and forget it ever existed. But I kept pushing, and out came my first real mashup ever. And here it is.

Apologies to those in Germany, where the video is blocked for copyright reasons. You can download or stream the MP3 or the AAC audio for now.

I put this up on the Internet, tweeted a link, and waited for feedback. I wasn’t expecting much to happen; my wildest expectations were that it might get 10,000 views and maybe a link on Reddit’s mashups community. Barely even a splash. I had no delusions of grandeur about its value; I just wanted to play with some ideas, get a little experience, and pull this splinter out of my mind.

I was wrong. Very, very wrong. Once you put something on the Internet, you lose any control over what happens to it. Within an hour, my mashup had gotten posted to Buzzfeed where it quickly started to gain traction. Within 12 hours, it had grown to 8,000 views like it was nothing. Within 24 hours, that number shot past my crazy hopeful dream to 16,000. It hit Mashable and The Daily What. That number grew to 34,000. 44,000. 88,000. Blogs and news sites started posting it as their viral video of the day. Know Your Meme picked it up. Radio stations around the world started playing it. 104,000. 122,000.

Then, just as it seemed like it was peaking, something utterly unexpected and amazing happened.

Check out #PartyRock … Gangnam Style! bitly.com/PartyRockGangn…

— LMFAO (@LMFAO) September 7, 2012

And a few hours later, it got retweeted by the man himself, PSY, in what may become my favorite screenshot ever.

I never expected anything like this. Not at all. It is an incredible honor to have a mashup work celebrated and shared by an artist you took from, let alone two.

Throughout this whole experience, I’ve been floored by supporters. People on the Internet tend to tell you what they really think while hiding behind the shield of anonymity. So, much to my surprise, the response has been phenomenal. There are over 5,000 likes of the video on YouTube, with 26 likes for every dislike. It’s been shared on Facebook and Twitter over 12,000 times; the LMFAO tweet itself got over 1,000 retweets. The audio files I posted on the YouTube page have been downloaded over 6,000 times. The video got hundreds of comments, most of which are positive. And I’ve gotten dozens (if not hundreds) of mentions on Twitter, Facebook, and App.net from friends and strangers who love it.

And the most astonishing thing to me? The mashup itself is catchy, but it’s far from being technically great. There are issues with some parts of the song not mashing well, clashes between keys, mismatched song syncing and beatmatching, issues with the video clips not being perfectly aligned, etc. It’s my first time making a mashup, and it was meant to be a learning experience, not a viral hit with flawless execution. I’ve heard my own song dozens of times, both during and after the process of making it. When you hear your own work that much, and you know exactly how it is pieced together, the faults are not only obvious, they get in your face no matter how much you try to shut them out. But it’s too late to do anything about that now. The Internet has taken the song and given it legs to run. It’s out of my hands, technical merits be damned.

I’m very fortunate to have an audience of thousands of people on social networks to seed ideas to. Many of them fall right on their face. I expected this to, as well. But then that group took it and started a chain reaction which brought this little weekend project to hundreds of thousands. I am deeply grateful for and humbled by the friends and strangers who gave me a week of consistently beaten expectations and holy-crap-I-can’t-believe-this-is-real moments. But most of all, I am more encouraged than ever to push forward and keep this little dream of making electronic music alive. I have a renewed sense of confidence and courage to improve, to try again, to turn a what-if into a reality.

So thank you to those who have offered their support, praise, critique, tweets, shares, likes, upvotes and downvotes. Thank you to everyone who overlooked the technical flaws and offered their appreciation. Thank you to LMFAO, Dev, The Offspring, The Bloodhound Gang, and of course PSY, for being the unwitting participants in a learning experiment, for putting something into the world that I could draw from. Thank you to the bloggers, the writers, the curators, and the DJs who gave me the elation of feeling for just a moment like a rock star. I won’t ever forget it.

Try something that scares the hell out of you. It just might turn into something wonderful.

Retina MacBook Pro Review: The Age of the High-Resolution PC

The desktop UI was invented almost 30 years ago. The original Macintosh had a 9” screen at 72 DPI. The modern conventions of size were established by these constraints; a 20-pixel-tall menu bar provided a target roughly 0.28” tall for the fairly precise mouse pointer. These constants have stuck with us throughout the lifespan of the desktop, occasionally getting ever so slightly smaller as display densities crept up here or there. At its peak, the desktop iMac reached about 108 DPI, 1.5x the pixel density of the 30-year-old Macintosh. The MacBook Pro improved further, reaching 128 DPI on a souped-up 15” model with a “high-res” display. 30 years of progress brought us to about 1.78x the pixel density. These improvements were great, and always breathed new life into the desktop, but we never managed to break through to a truly high-density display where individual pixels were indistinguishable. Despite years of hoping, it seemed as if we had hit a wall in packing pixels tightly together, one that would keep us stuck forever seeing the boundaries of a pixel.
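The density figures in this paragraph are simple ratios, so they are easy to check. This sketch does two things. It divides a length in pixels by DPI to get its physical size in inches. It also compares each display’s linear density against the original Macintosh’s 72 DPI. The helper names are illustrative only.

```javascript
// Physical size (in inches) of something `pixels` tall on a display of `dpi`.
const inches = (pixels, dpi) => pixels / dpi;

// Linear pixel density relative to a baseline (the original Mac's 72 DPI).
const densityRatio = (dpi, baselineDpi = 72) => dpi / baselineDpi;

console.log(inches(20, 72).toFixed(2));    // prints 0.28 (20 px menu bar on the original Mac)
console.log(densityRatio(108).toFixed(2)); // prints 1.50 (peak iMac vs. original Mac)
console.log(densityRatio(128).toFixed(2)); // prints 1.78 (high-res 15" MacBook Pro vs. original Mac)
```

These are ratios of linear density (pixels per inch). The number of pixels in a given area grows with the square of that ratio, so 1.5x the DPI means 2.25x as many pixels per square inch.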

Meanwhile, the mobile revolution happened. The original iPhone blew all of these displays out of the water with what was (at the time) a ridiculous 163 DPI display. Those of us who were paying close attention to pixel density knew at the time what a big deal this was. The iPad also brought a great 132 DPI display, still higher than the best Apple could put into any desktop or notebook. EDIT: Apparently the 17” MacBook Pros had a display at 132 DPI, so equivalent to the iPad. Soon after, both displays had their pixel density doubled (to 326 and 264 DPI, respectively), an astounding feat of engineering. The results were truly something else. For most people, the move to a Retina display was immediately noticeable and improved the experience while reading, looking at photos, watching video, and browsing the web. Hopes were renewed that Apple would eventually take this technology back to its roots and bring a Retina-class display to a Mac.

That day has finally come. At WWDC 2012, Apple officially dipped a toe into the water of the high-resolution desktop with the new Retina MacBook Pro. They took the old MacBook Pro’s display and packed four pixels together in place of each one. On top of that, they took the opportunity to do what Apple does best: get rid of dead technology. Software and media are largely delivered via the Internet, so they could get rid of the heavy, noisy, and large optical drive. Spinning disk drives also got the axe, with tiny solid-state drives taking their place. Wireless networking is ubiquitous, far more so than wired networking, so they got rid of the Ethernet port. In their place, they put more USB ports, more Thunderbolt ports, and even an HDMI port. By removing all this bulk, they got the MacBook Pro down to just under 3/4 of an inch thick and just under 4.5 pounds.
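Packing four pixels into the space of one means doubling the linear density: twice as many pixels across and twice as many down. This sketch assumes a baseline of the standard 15” MacBook Pro panel at 1440×900, rather than the 128 DPI high-res option. Doubling that baseline gives the Retina model’s 2880×1800. The helper functions are hypothetical and only illustrate the arithmetic.

```javascript
// Scale a display's resolution by a linear factor at the same physical size.
function scaleResolution({ width, height }, factor) {
  return { width: width * factor, height: height * factor };
}

// Total number of pixels on a display.
const pixelCount = ({ width, height }) => width * height;

// Assumed baseline: the standard (non-high-res) 15" MacBook Pro panel.
const standard = { width: 1440, height: 900 };
const retina = scaleResolution(standard, 2);

console.log(retina.width, retina.height);               // prints 2880 1800
console.log(pixelCount(retina) / pixelCount(standard)); // prints 4
```

Because width and height each double, the total pixel count quadruples, which is why “four pixels in place of each one” and “doubled pixel density” describe the same change.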

The new Retina MacBook Pro is a solid foundation on which to build pro-level notebooks for the next few years, and is in every way an improvement on older notebooks. It has a small share of early adopter issues, some of which will require new software updates, some of which will require waiting until next year’s laptop ships. But as someone who has been waiting for a pixel-free world for the better part of a decade, I couldn’t have wished for a better and more focused product from Apple.

Server teams are made up of the people who write and maintain the code that makes servers go, as well as those who keep that code working. Twitter, Facebook, Instagram, Amazon, Google, and every other service in the world have one or more people in this role. When things go right, nobody notices, and they get no praise. When things go wrong, their phones ring at 3 in the morning, they’re up fixing a new issue, and they’re answering calls from everyone up the corporate ladder screaming about how much money they’re losing. It’s a thankless job.

Diablo 3’s launch has had a number of issues around load. When you have millions of people swarming on a server, ruthlessly trying to log in every few seconds, it causes a huge amount of load. At this scale, things you expect to go right suddenly break in strange and unfamiliar ways. No amount of load testing could adequately prepare the server team behind Diablo 3 for firepower of this magnitude. The way you fix these kinds of issues is to look at what’s slow, fix it to make it less slow, and hope it works. Do this until load stops being a problem. Oh, and you have to do it quickly, because with every second that goes by, people are getting more and more upset. And you can’t break anything else while you do it.

The people who work on the servers for services of any decent scale cope with new problems every day around keeping the thing alive and healthy. The Diablo server team has been moving quickly, solving issues of massive scale in a short time, and getting the service running again. Players notice and yell when the service stops working, but the team’s response and ongoing maintenance have been quick and effective.

Next time you’re using an Internet service, or playing a multiplayer game, think about the people who keep it running. If you know any of these people, tell them thanks. They’re the unsung heroes of the Internet.

Smartphones have replaced lots of types of small devices. iOS and Android have made it easy to build apps that perform all kinds of functions, replacing other standalone devices like media players and GPS units. Some have wondered whether they would replace handheld gaming devices, and for many people they have. For a while, I thought they had, at least for my needs. But after trying to play games on touchscreen-only devices for years, I’ve largely felt unenthused about the deeper and more engaging games that would come from big studios. These games required a level of precise control that touchscreens just couldn’t deliver.

The PS Vita caught my attention about a month before its launch in the US. It combines a lot of the best features of smartphones with the controls of console games. It has a gorgeous, large, high-resolution touchscreen (and a back panel that is touch-sensitive), as well as a tilt sensor and cameras for augmented reality games. But it also has almost all of the buttons of a typical PS3 controller, including two analog sticks. Sony managed to cram all of this functionality into a device that, while large, is not too big to fit into my pocket, and with long enough battery life for a busy day interspersed with some gaming. The combination of apps and games (which I will describe as just “apps” for the sake of this review) is powerful, and the hardware power and display size make it a compelling device.

2011 is coming to a close, so I’d like to take a moment to highlight a few apps and games on Mac and iPhone that have been invaluable to me. I broke this out into four categories, each with two apps. I have purposely omitted iPad, because frankly, I rarely use my iPad (and I prefer the TouchPad over the iPad), and don’t feel I’ve played with enough iPad apps to really give it a fair shake. So I’ve left that off to focus on iPhone and Mac apps and games. I hope you’ll check out all of these great apps.

Adobe is finally putting an end to Flash Player. They’ve announced they’re stopping development of Flash Player for mobile devices, which is where tech innovation is heading, and the writing is on the wall for desktop Flash Player as well. This is a good thing for a myriad of reasons, both technical and political.

However, it is important to remember that Flash drove much of the innovation on the web as we know it today. When Flash was conceived over a decade ago, the web was a glimmer of what it is today. Creating something visually impressive and interactive was almost impossible. Flash brought the ability to do animation, sound, video, 3D graphics, and local storage in the browser when nothing else could.

Without Flash, MapQuest would not have been able to provide maps for years before Google did in JavaScript. The juggernaut YouTube would not have been possible until at least 2009, four years after its actual launch. Gaming on the web, which has been around as long as Flash, would only now be possible a decade later. Flash enabled developers to create rich user experiences in a market dominated by slow-moving browser developers. Even in 2011, Flash exists to provide those more powerful apps to less tech-savvy people who still use old versions of Internet Explorer.

Flash Player itself seemed like a means to an end. Macromedia, and later Adobe after acquiring it, sold the tools you use to build Flash content. Thus, Adobe’s incentive was not to build a great Flash Player, but a pervasive one that would sell its tools. Flash Player’s technical stagnation gave browser developers a market opportunity to fill the gaps that Flash had been covering. And because its business was built on tools, Adobe has huge market dominance in tools for building rich apps for the web, tools HTML5 lacks.

This puts Adobe in a unique position. As HTML5 continues to negate the need for Flash Player, Adobe has the tools for implementing Flash within HTML5, and the market eager for those tools. Hopefully this move signals that Adobe will be moving in this direction. Because the web DOES need great HTML5 tools for people who aren’t savvy in JavaScript, especially for the people who used Flash to do it previously.

HTML5 offers developers the ability to build high-performance, low-power apps and experiences. Browser innovation has never been faster; Apple, Google, Microsoft, and Mozilla are all competing to bring the best new features to their browsers in compatible ways. But they’re just now filling in many features Flash Player has had for years. Adobe can harness this to help build a better web, and few others can. Hopefully they seize this moment.